Artificial intelligence has advanced rapidly in recent years, but one challenge still keeps robots from operating the way humans do in the physical world. Humans instinctively combine sight and touch to interact with objects, while most robots rely heavily on vision alone. An international research team is addressing that gap by teaching robots to see and feel at the same time, enabling more natural and precise object handling.

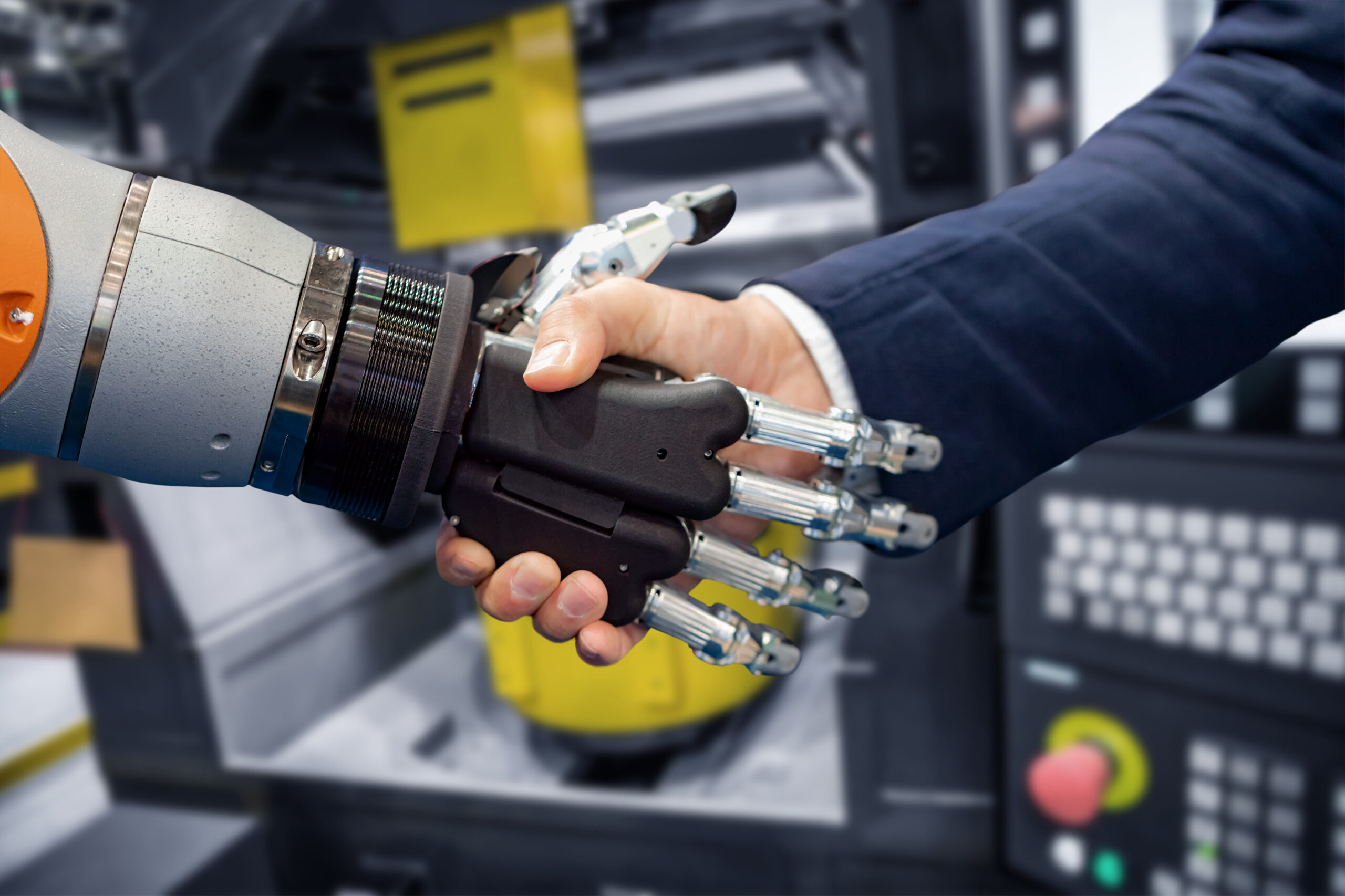

This advancement brings us closer to physical AI systems that can assist with everyday human tasks, from household chores to caregiving.

Why Vision Alone Is Not Enough for Robots

For humans, picking up a coffee cup is effortless. We judge distance visually and adjust our grip through touch, all in real time. Robots, however, have traditionally depended on cameras and visual data to perform similar actions. While effective in controlled environments, vision-only systems struggle with objects that look similar but feel very different.

Materials like Velcro or zip ties are perfect examples. Their function depends on texture, adhesiveness, and orientation, all of which are difficult to judge visually. Without tactile feedback, a robot cannot reliably determine which side is which or how much force to apply. This limitation has prevented robots from handling complex, real-world objects with confidence.

Introducing TactileAloha and Multimodal Physical AI

To overcome these challenges, researchers developed a new system called TactileAloha. Built on Stanford University’s open-source ALOHA robotic platform, the system integrates visual and tactile information using a vision-tactile transformer.

By combining what the robot sees with what it physically feels, TactileAloha enables robotic arms to adapt their movements dynamically. According to Mitsuhiro Hayashibe, Professor at Tohoku University’s Graduate School of Engineering, this approach allows robots to make decisions based on texture and physical feedback, something vision alone cannot achieve.
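To make the idea of combining sight and touch more concrete, here is a minimal Python sketch of one generic way a transformer-style model can mix two modalities: the visual and tactile feature vectors are placed in a single token sequence, and self-attention lets every token draw on every other. This is only an illustration under stated assumptions, not TactileAloha's actual architecture. The function names (`attend`, `fuse`), the 4-dimensional feature vectors, and the use of identity projections instead of learned weights are all hypothetical simplifications.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(tokens):
    """Single-head self-attention with identity projections (no learned weights)."""
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    out = []
    for q in tokens:
        # Scaled dot-product score between this token and every token.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all tokens in the sequence.
        out.append([sum(w * v[i] for w, v in zip(weights, tokens))
                    for i in range(dim)])
    return out


def fuse(vision_tokens, tactile_tokens):
    """Join both modalities into one sequence and let the tokens attend to each other."""
    tokens = [list(t) for t in vision_tokens] + [list(t) for t in tactile_tokens]
    return attend(tokens)


# Hypothetical feature vectors; a real system would get these from learned encoders.
vision = [[1.0, 0.0, 0.5, 0.2], [0.9, 0.1, 0.4, 0.3]]
tactile = [[0.0, 1.0, 0.1, 0.8]]

fused = fuse(vision, tactile)
print(len(fused), len(fused[0]))  # 3 tokens, each still 4-dimensional
```

After fusion, each visual token carries some tactile information and vice versa, which is the property a downstream controller would need in order to adjust a grip based on both what is seen and what is felt.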

The result is a more flexible and human-like robotic control system that significantly improves task success rates compared to conventional vision-based AI.

Real-World Performance and Everyday Applications

In practical tests, robots equipped with TactileAloha performed well on challenging objects. Tasks involving Velcro and zip ties, where front-back orientation and adhesiveness are critical, were completed with greater accuracy and adaptability than with conventional vision-based control.

This breakthrough has wide-reaching implications. Robots that can reliably integrate sight and touch could assist with cooking, cleaning, industrial assembly, and even caregiving. By forming responsive movements based on multiple sensory inputs, these systems move beyond rigid automation and toward intelligent collaboration with humans.

Research like this signals a future where robots are not just tools but capable helpers in everyday environments.

A Major Step Toward Human-Like Robotic Intelligence

The development of TactileAloha represents a significant milestone in multimodal physical AI. By processing multiple senses simultaneously, robots can now interact with the world in a way that more closely resembles human behavior.

As this technology evolves, the gap between human and robotic capabilities in physical tasks should continue to narrow. Innovations like TactileAloha bring us one step closer to intelligent robotic assistants becoming part of daily life.

Source: Neuroscience News